PrimateFace

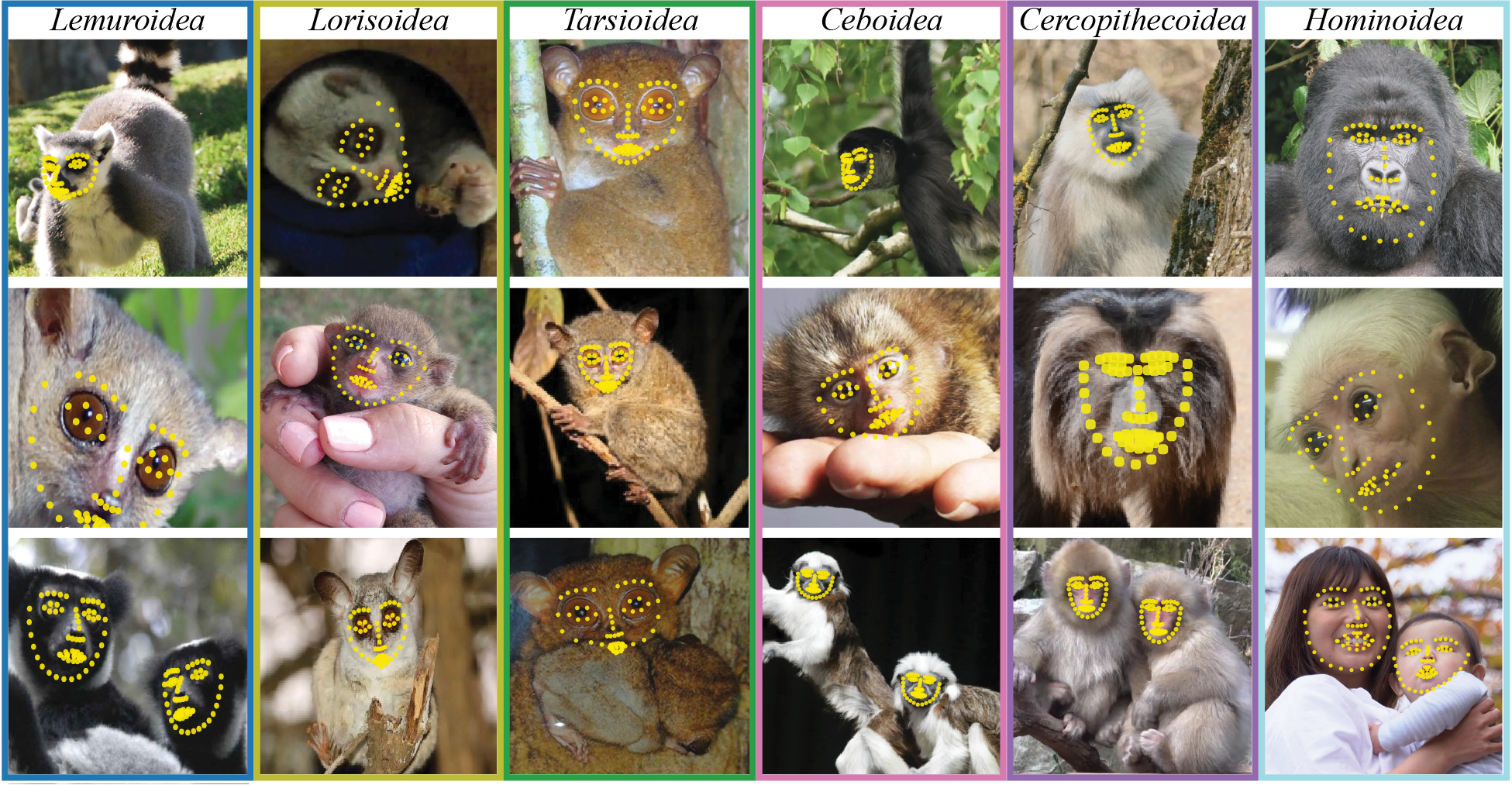

Cross-Species Primate Face Detection and Landmark Analysis

PrimateFace is an open-source toolkit for automated facial analysis across primate species. It provides pretrained models, datasets, and tools for face detection, landmark localization, and behavioral analysis in humans and non-human primates.

Quick Start

```bash
# 1. Create the conda environment
conda env create -f environment.yml
conda activate primateface

# 2. Install PyTorch for your system
# Check your CUDA version with `nvcc --version`, then replace cu118 below
# with the matching wheel index (for example cu121 for CUDA 12.1)
uv pip install torch==2.1.0 torchvision==0.16.0 --index-url https://download.pytorch.org/whl/cu118

# 3. Install optional modules
uv pip install -e ".[dinov2,gui,dev]"

# 4. Test with the demo
python demos/download_models.py
python demos/primateface_demo.py process --input ateles_000003.jpeg \
    --det-config mmdet_config.py --det-checkpoint mmdet_checkpoint.pth
```
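Step 2 asks you to match the PyTorch wheel index to your CUDA version. The helper below sketches that lookup in Python, assuming `nvcc --version` prints a line containing `release X.Y` (its usual format); the function names are hypothetical and not part of PrimateFace. A generated tag is only useful if PyTorch publishes torch 2.1.0 wheels for it (cu118 and cu121 exist for that release), so check PyTorch's previous-versions page before installing.

```python
import re


def cuda_wheel_tag(nvcc_output: str) -> str:
    """Turn `nvcc --version` output into a PyTorch wheel tag such as 'cu118'."""
    # nvcc usually prints a line like: "Cuda compilation tools, release 11.8, V11.8.89"
    match = re.search(r"release (\d+)\.(\d+)", nvcc_output)
    if match is None:
        raise ValueError("could not find a CUDA release number in the nvcc output")
    major, minor = match.groups()
    return f"cu{major}{minor}"


def torch_index_url(tag: str) -> str:
    """PyTorch serves CUDA-specific wheels under this URL prefix."""
    return f"https://download.pytorch.org/whl/{tag}"


sample = "Cuda compilation tools, release 11.8, V11.8.89"
print(torch_index_url(cuda_wheel_tag(sample)))
# → https://download.pytorch.org/whl/cu118
```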

Getting Started

Installation

See our detailed installation guide or use the quick install above.

First Steps

- Try the demos → Quick inference guide

- Explore notebooks → Interactive tutorials

- Choose your workflow → Decision tree

- Read the paper → Scientific background

Project Components

Dataset

Comprehensive primate face dataset with annotations:

- 68-point facial landmarks
- 49-point simplified annotations
- Bounding boxes and species labels

Models

Pretrained models optimized for primates:

- Face detection (MMDetection, Ultralytics)
- Pose estimation (MMPose, DeepLabCut, SLEAP)
- Species classification (vision-language models, VLMs)
- Landmark converters
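Detector outputs and dataset annotations often disagree on box layout: COCO annotations store `[x, y, width, height]`, while MMDetection predictions and Ultralytics' `boxes.xyxy` use corner coordinates `[x1, y1, x2, y2]`. A minimal pair of converters, with hypothetical function names, might look like this:

```python
from typing import List, Sequence


def xywh_to_xyxy(box: Sequence[float]) -> List[float]:
    """COCO-style [x, y, width, height] -> corner form [x1, y1, x2, y2]."""
    x, y, w, h = box
    return [x, y, x + w, y + h]


def xyxy_to_xywh(box: Sequence[float]) -> List[float]:
    """Corner form [x1, y1, x2, y2] -> COCO-style [x, y, width, height]."""
    x1, y1, x2, y2 = box
    return [x1, y1, x2 - x1, y2 - y1]


# Round trip on a hypothetical face box
face = [120.0, 80.0, 64.0, 72.0]
corners = xywh_to_xyxy(face)
print(corners)
# → [120.0, 80.0, 184.0, 152.0]
```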

Documentation

📚 Getting Started

🎓 Tutorials

Interactive notebooks demonstrating applications:

- Lemur face visibility time-stamping
- Macaque face recognition
- Howler monkey vocal-motor coupling
- Gaze following analysis
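The lemur tutorial's time-stamping step boils down to turning per-frame detections into time intervals. A minimal sketch, assuming a detector has already produced one True/False flag per frame and the video has a constant frame rate; `visible_intervals` is a hypothetical name, not a PrimateFace function:

```python
from typing import List, Tuple


def visible_intervals(face_found: List[bool], fps: float) -> List[Tuple[float, float]]:
    """Collapse per-frame detection flags into (start_s, end_s) intervals.

    end_s is exclusive: it is the time at which the first frame without a face begins.
    """
    intervals: List[Tuple[float, float]] = []
    start = None
    for i, found in enumerate(face_found):
        if found and start is None:
            start = i  # a visible run begins on this frame
        elif not found and start is not None:
            intervals.append((start / fps, i / fps))
            start = None  # the run ended on the previous frame
    if start is not None:  # the video ended while the face was still visible
        intervals.append((start / fps, len(face_found) / fps))
    return intervals


flags = [False, True, True, True, False, False, True, True]
print(visible_intervals(flags, fps=2.0))
# → [(0.5, 2.0), (3.0, 4.0)]
```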

📖 User Guide

Core Workflows

- Demos - Complete inference pipeline

- DINOv2 - Feature extraction & visualization

- GUI - Interactive annotation workflow

- Landmark Converter - Format conversion
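One simple way to convert between landmark schemes is a fixed index mapping in which each target landmark is the mean of one or more source landmarks. The sketch below shows only that idea; it is not PrimateFace's Landmark Converter, which may learn the mapping from data, and the index groups in the example are made up for illustration:

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def convert_landmarks(source: Sequence[Point],
                      groups: Dict[int, List[int]]) -> List[Point]:
    """Map source landmarks onto a target scheme via fixed index groups.

    groups[t] lists the source indices averaged to form target landmark t;
    target indices are assumed to run 0..len(groups) - 1.
    """
    target: List[Point] = []
    for t in range(len(groups)):
        idx = groups[t]
        x = sum(source[i][0] for i in idx) / len(idx)
        y = sum(source[i][1] for i in idx) / len(idx)
        target.append((x, y))
    return target


# Hypothetical mapping: target 0 copies source 0, target 1 averages sources 1 and 2
source = [(0.0, 0.0), (2.0, 4.0), (4.0, 8.0)]
print(convert_landmarks(source, {0: [0], 1: [1, 2]}))
# → [(0.0, 0.0), (3.0, 6.0)]
```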

Framework Integration

- MMPose/MMDetection - Primary framework

- DeepLabCut - Behavioral analysis

- SLEAP - Multi-animal tracking

- Ultralytics - Real-time detection
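For the Ultralytics route, detection labels use one text line per object, `class x_center y_center width height`, with coordinates normalized by the image width and height. A sketch of converting a COCO box into that line, assuming a single face class with id 0 (the function name is hypothetical):

```python
from typing import Sequence


def coco_bbox_to_yolo_line(bbox: Sequence[float], img_w: int, img_h: int,
                           class_id: int = 0) -> str:
    """Convert a COCO [x, y, width, height] pixel box to a YOLO label line."""
    x, y, w, h = bbox
    cx = (x + w / 2) / img_w  # box center, normalized to [0, 1]
    cy = (y + h / 2) / img_h
    return f"{class_id} {cx:.6f} {cy:.6f} {w / img_w:.6f} {h / img_h:.6f}"


print(coco_bbox_to_yolo_line([100, 50, 200, 100], img_w=400, img_h=200))
# → 0 0.500000 0.500000 0.500000 0.500000
```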

Utilities

- Evaluation - Model comparison & metrics

- Data Processing - Format converters

- Visualization - Plotting & analysis
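Landmark accuracy is commonly reported as normalized mean error (NME): the mean Euclidean distance between predicted and ground-truth landmarks, divided by a face-size normalizer. Normalizer conventions differ between papers (inter-ocular distance, bounding-box size, and others), so the `sqrt(width * height)` choice below is an assumption and may not match PrimateFace's evaluation scripts:

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def nme(pred: Sequence[Point], gt: Sequence[Point], norm: float) -> float:
    """Normalized mean error: mean Euclidean landmark error divided by `norm`."""
    if len(pred) != len(gt) or not gt:
        raise ValueError("pred and gt must be non-empty and the same length")
    if norm <= 0:
        raise ValueError("norm must be positive")
    errors = [math.dist(p, g) for p, g in zip(pred, gt)]
    return sum(errors) / len(errors) / norm


def bbox_norm(bbox_wh: Tuple[float, float]) -> float:
    """Assumed normalizer: sqrt(width * height) of the face bounding box."""
    w, h = bbox_wh
    return math.sqrt(w * h)


gt = [(10.0, 10.0), (20.0, 10.0)]
pred = [(13.0, 14.0), (20.0, 10.0)]  # first landmark is off by a 3-4-5 triangle
print(nme(pred, gt, norm=bbox_norm((50.0, 50.0))))
# → 0.05
```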

Concepts

- Facial landmarks (68-point vs 48-point)

- DINOv2 features explained

- Evaluation metrics
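DINOv2 maps each image to a feature vector, and a common way to compare such vectors is cosine similarity, for example to retrieve the most similar face crop in a gallery. The sketch below uses toy 3-dimensional vectors in place of real DINOv2 embeddings (which have hundreds of dimensions); the helper names are hypothetical:

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


def nearest(query: Sequence[float], gallery: List[Sequence[float]]) -> int:
    """Index of the gallery vector most similar to the query."""
    return max(range(len(gallery)), key=lambda i: cosine(query, gallery[i]))


# Toy stand-ins for DINOv2 embeddings of three gallery face crops
gallery = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.7, 0.7, 0.0]]
print(nearest([0.9, 0.1, 0.0], gallery))
# → 0
```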

🔧 API Reference

- Core APIs: Detection, Pose, Annotation

- Feature APIs: DINOv2, Converter, Evaluation

📊 Data & Models

- Pretrained model downloads

- Dataset specifications

- COCO format guide
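In COCO keypoint annotations, each landmark is stored as an `(x, y, v)` triplet in one flat `keypoints` list, where `v` is 0 (not labeled), 1 (labeled but not visible), or 2 (labeled and visible), and `num_keypoints` counts landmarks with `v > 0`. A minimal builder for a 68-landmark face, with hypothetical names and without PrimateFace-specific fields such as species labels, could look like this:

```python
from typing import Dict, List, Sequence, Tuple

Triplet = Tuple[float, float, int]


def make_keypoint_annotation(ann_id: int, image_id: int,
                             bbox: Sequence[float],
                             points: Sequence[Triplet],
                             category_id: int = 1) -> Dict:
    """Build one COCO-style keypoint annotation from (x, y, v) triplets."""
    flat: List[float] = []
    for x, y, v in points:
        if v not in (0, 1, 2):
            raise ValueError(f"invalid COCO visibility flag: {v}")
        if v == 0:
            x, y = 0, 0  # unlabeled landmarks are stored as (0, 0, 0)
        flat.extend([x, y, v])
    return {
        "id": ann_id,
        "image_id": image_id,
        "category_id": category_id,
        "bbox": list(bbox),         # [x, y, width, height] in pixels
        "area": bbox[2] * bbox[3],  # box area as a stand-in when there is no mask
        "iscrowd": 0,
        "keypoints": flat,          # x1, y1, v1, x2, y2, v2, ...
        "num_keypoints": sum(1 for _, _, v in points if v > 0),
    }


# A 68-landmark face with only the first landmark labeled
points = [(12.0, 25.0, 2)] + [(0.0, 0.0, 0)] * 67
ann = make_keypoint_annotation(1, 7, [10.0, 20.0, 30.0, 40.0], points)
print(len(ann["keypoints"]), ann["num_keypoints"])
# → 204 1
```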

🛠️ Troubleshooting

- Common issues and solutions

- Performance optimization

🤝 Contributing

- Submit your primate images

- Contribute to the dataset

Community

Get Help

- GitHub Issues: Report bugs

- Discussions: Ask questions

For pressing questions or collaborations, reach out via:

- PrimateFace email: primateface@gmail.com
- Felipe Parodi email: fparodi@upenn.edu

Resources

Citation

If you use PrimateFace in your research, please cite:

```bibtex
@article{parodi2025primateface,
  title={PrimateFace: A Machine Learning Resource for Automated Face Analysis in Human and Non-human Primates},
  author={Parodi, Felipe and Matelsky, Jordan and Lamacchia, Alessandro and Segado, Melanie and Jiang, Yaoguang and Regla-Vargas, Alejandra and Sofi, Liala and Kimock, Clare and Waller, Bridget M and Platt, Michael and Kording, Konrad P},
  journal={bioRxiv},
  pages={2025--08},
  year={2025},
  publisher={Cold Spring Harbor Laboratory}
}
```

License

This project is released under the MIT License for research purposes.